Web search and browsing

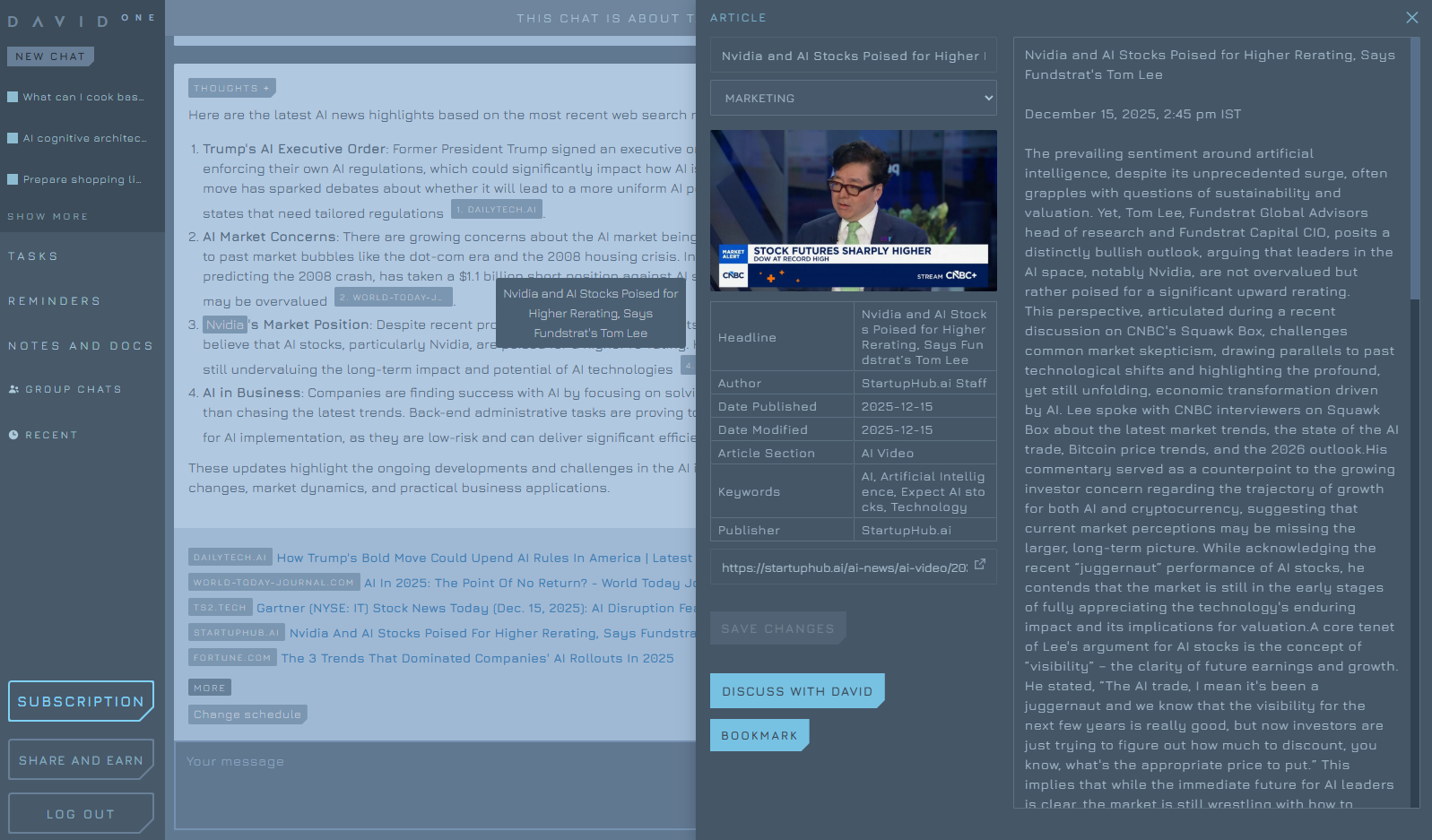

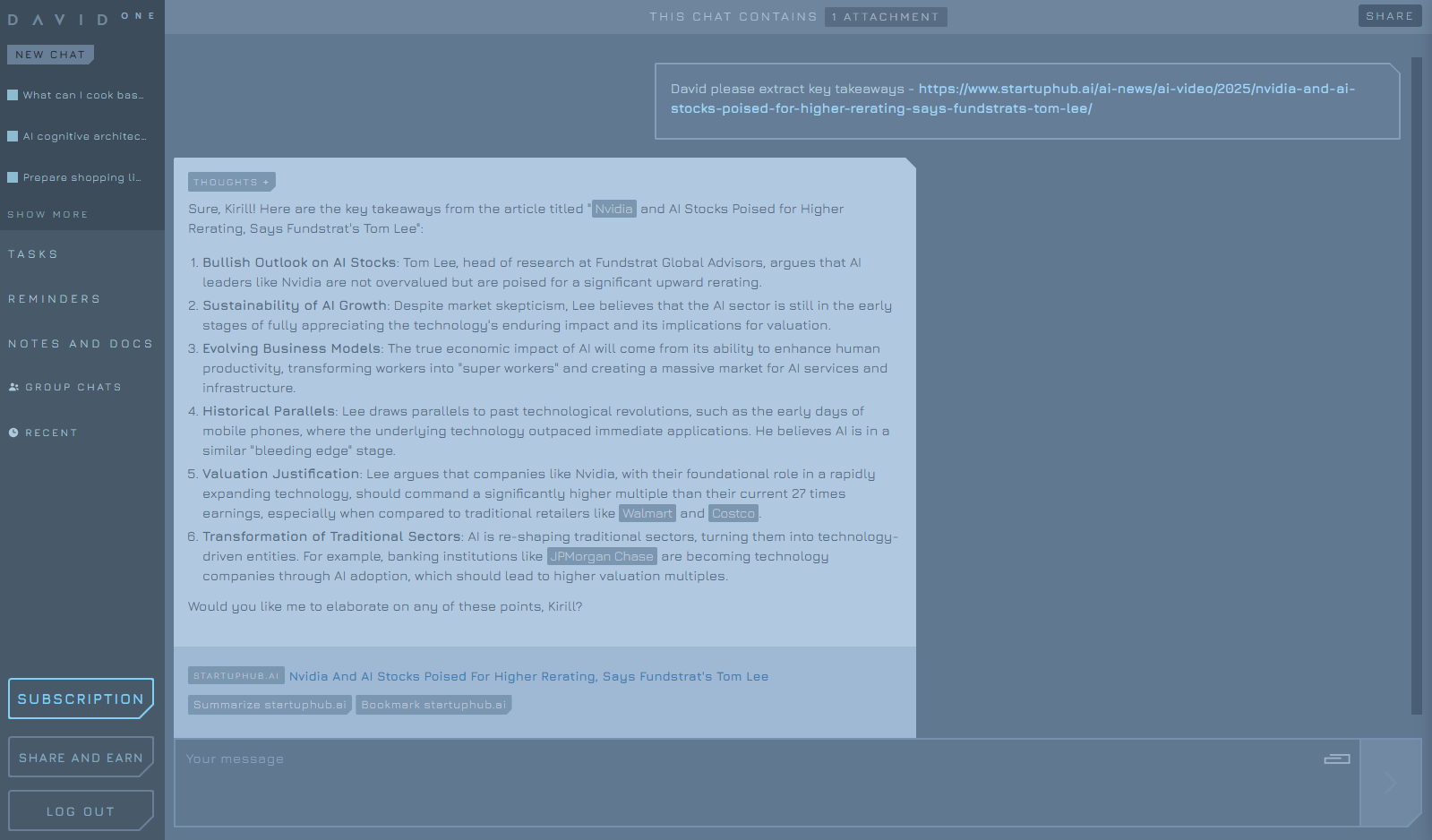

David can search the web to provide more up-to-date answers, with citations to sources

Ask questions about external web pages and summarize them to save time

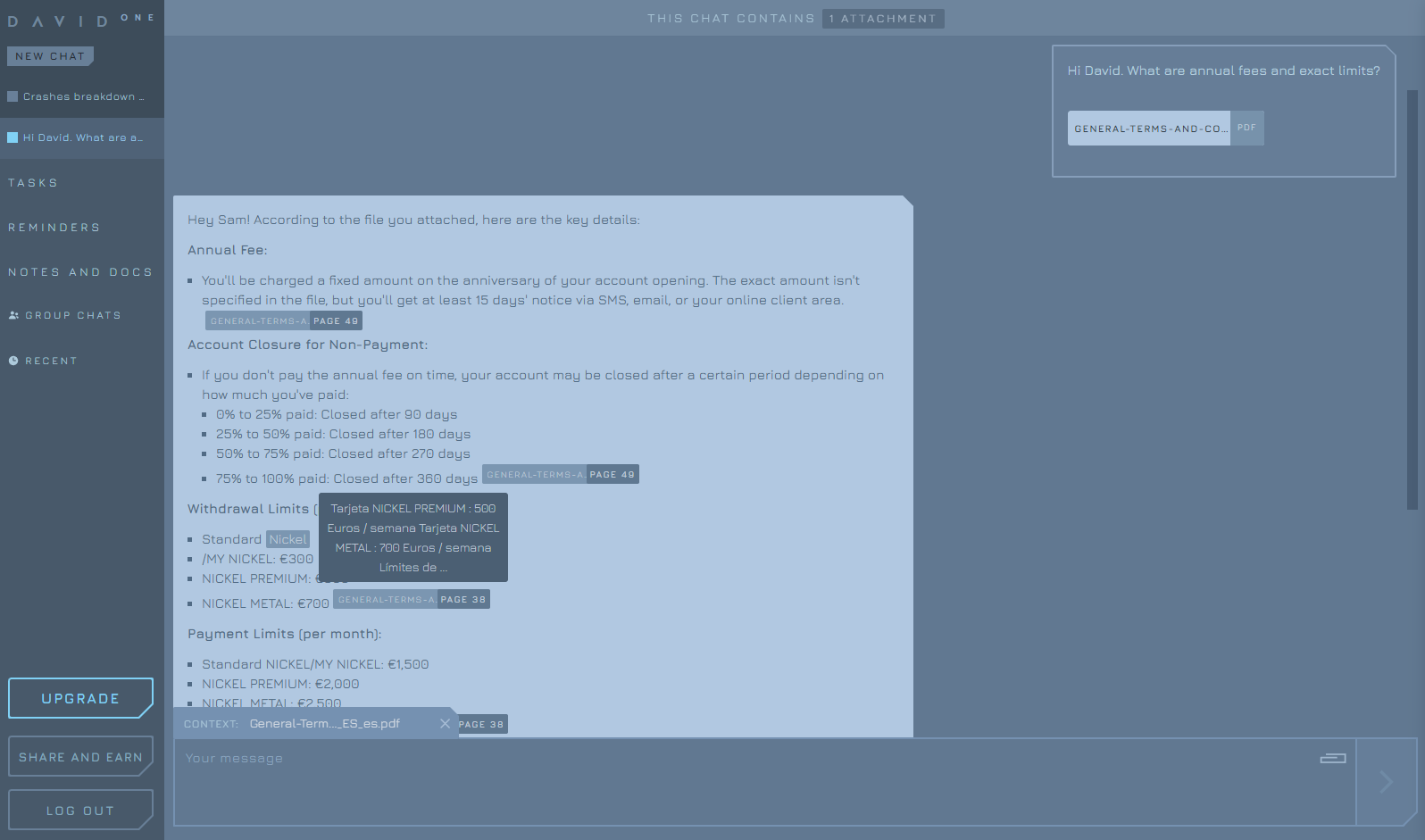

Document analysis

Quickly find information in files in any language, with page- and paragraph-level citations

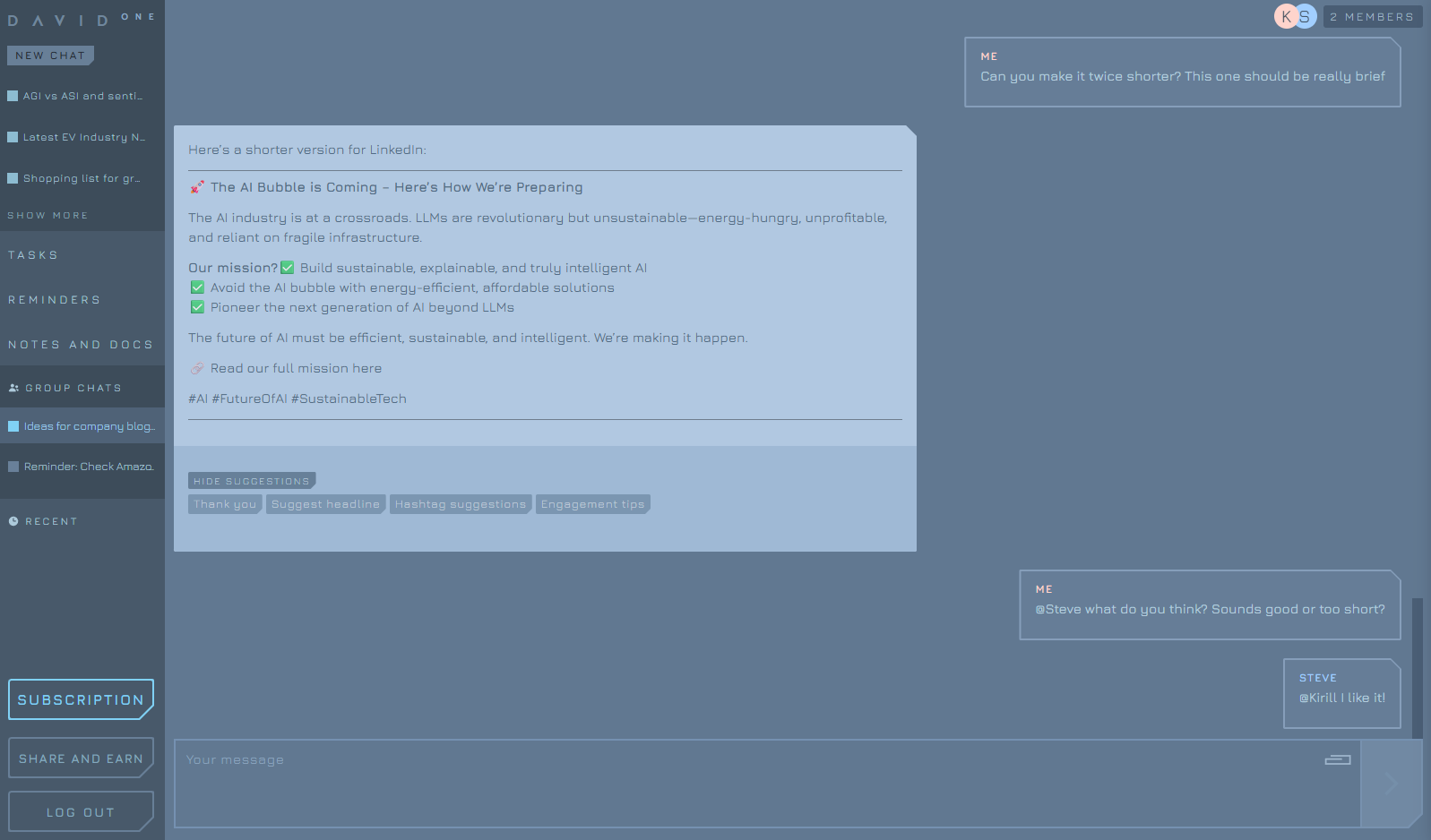

Group chats

Create group chats with your friends or co-workers to work together with David

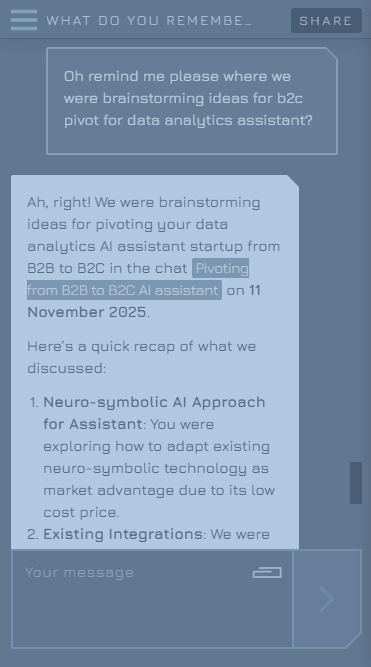

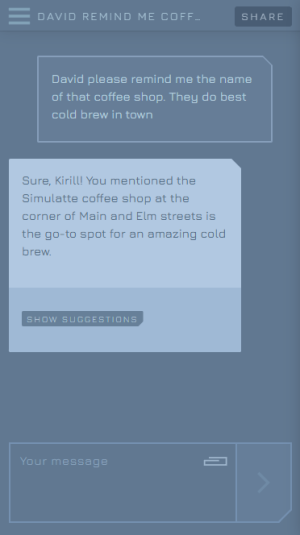

Memory beyond current chat

With brain-inspired memory, David is even more personalized and remembers important context across your chats

Productivity in mind

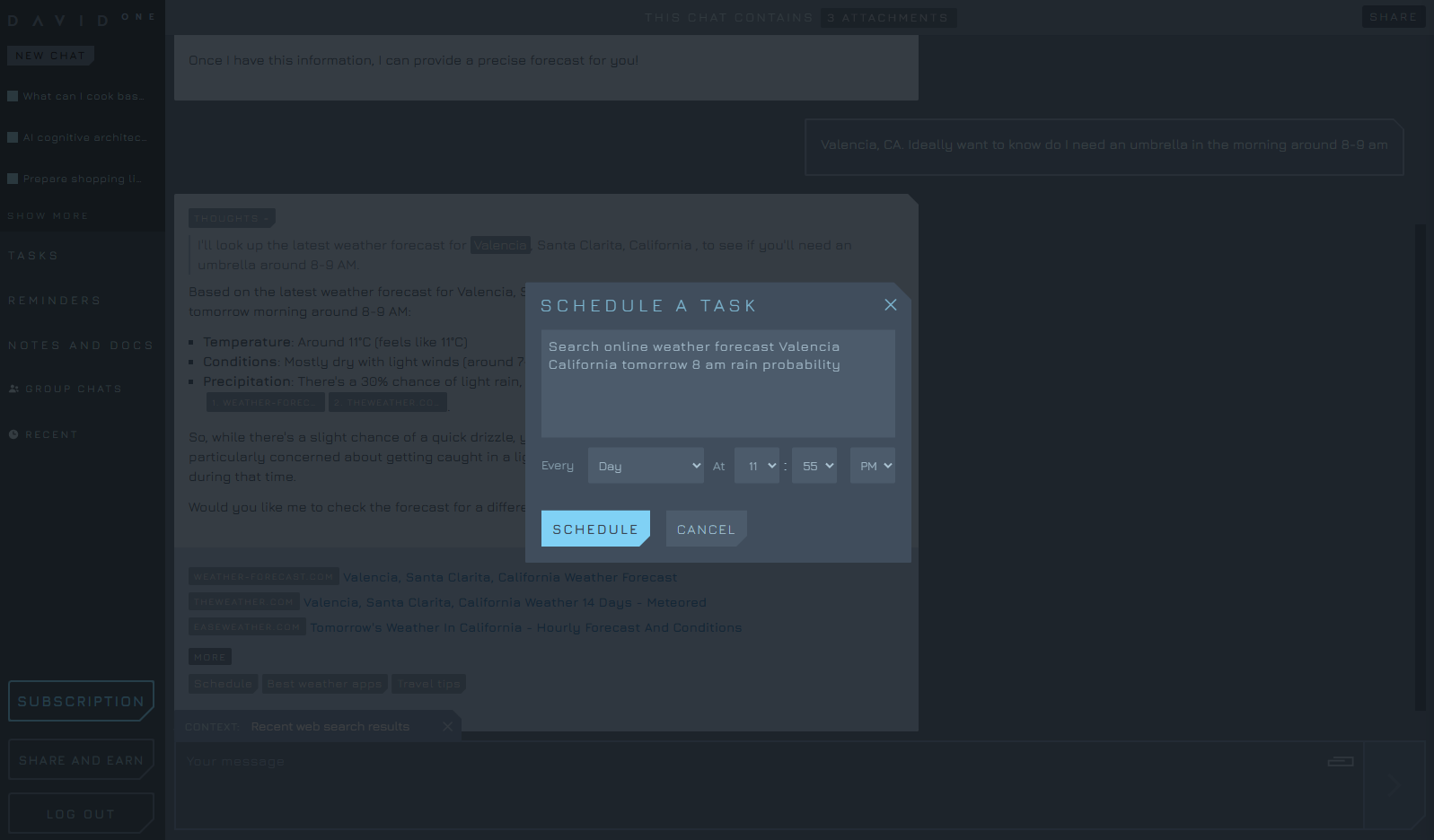

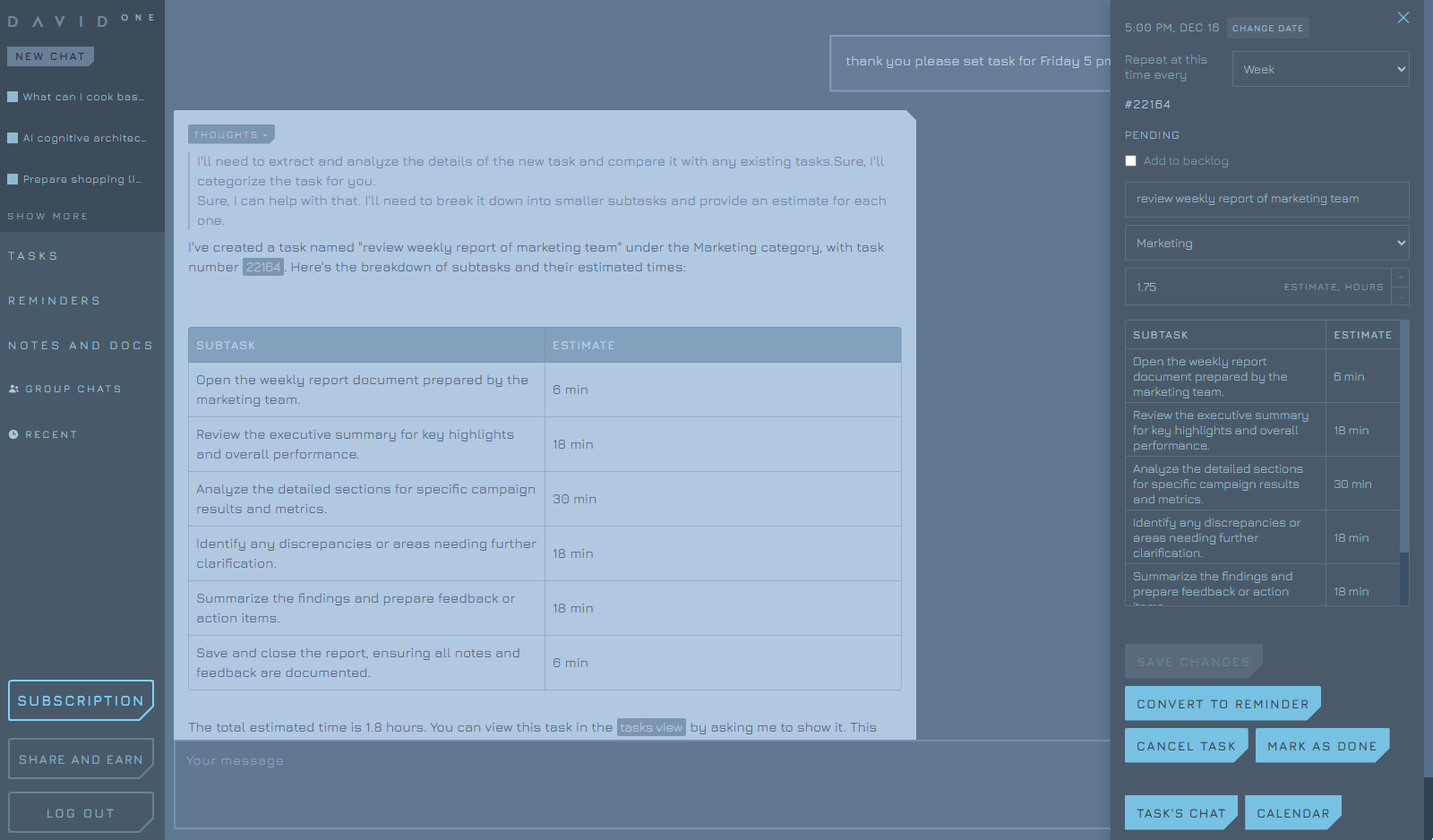

Run tasks on a schedule in the background

Manage your routine with a built-in to-do list, task breakdown, reminders, and notes

Beyond the text

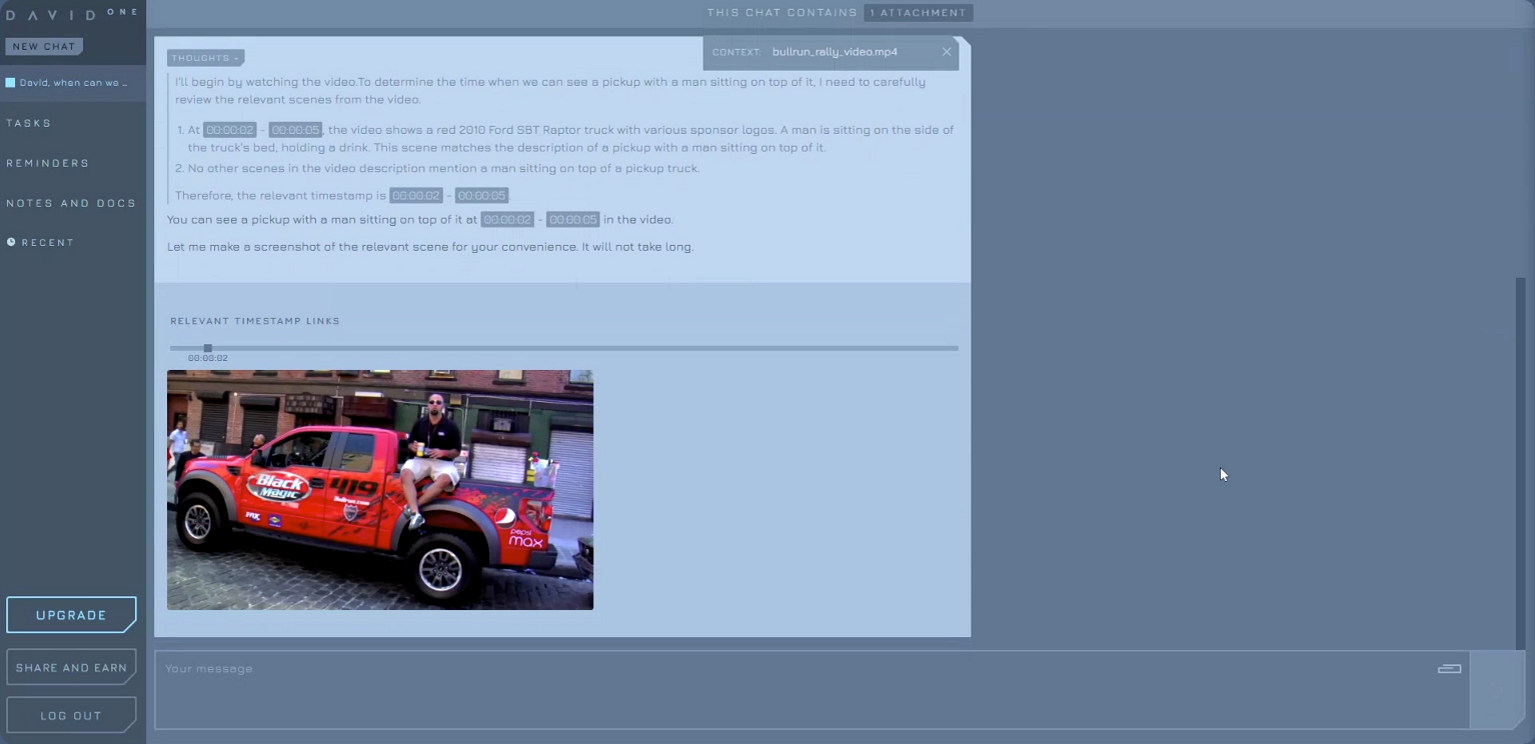

David can watch videos to answer questions, summarize them, and find specific scenes with exact timestamps

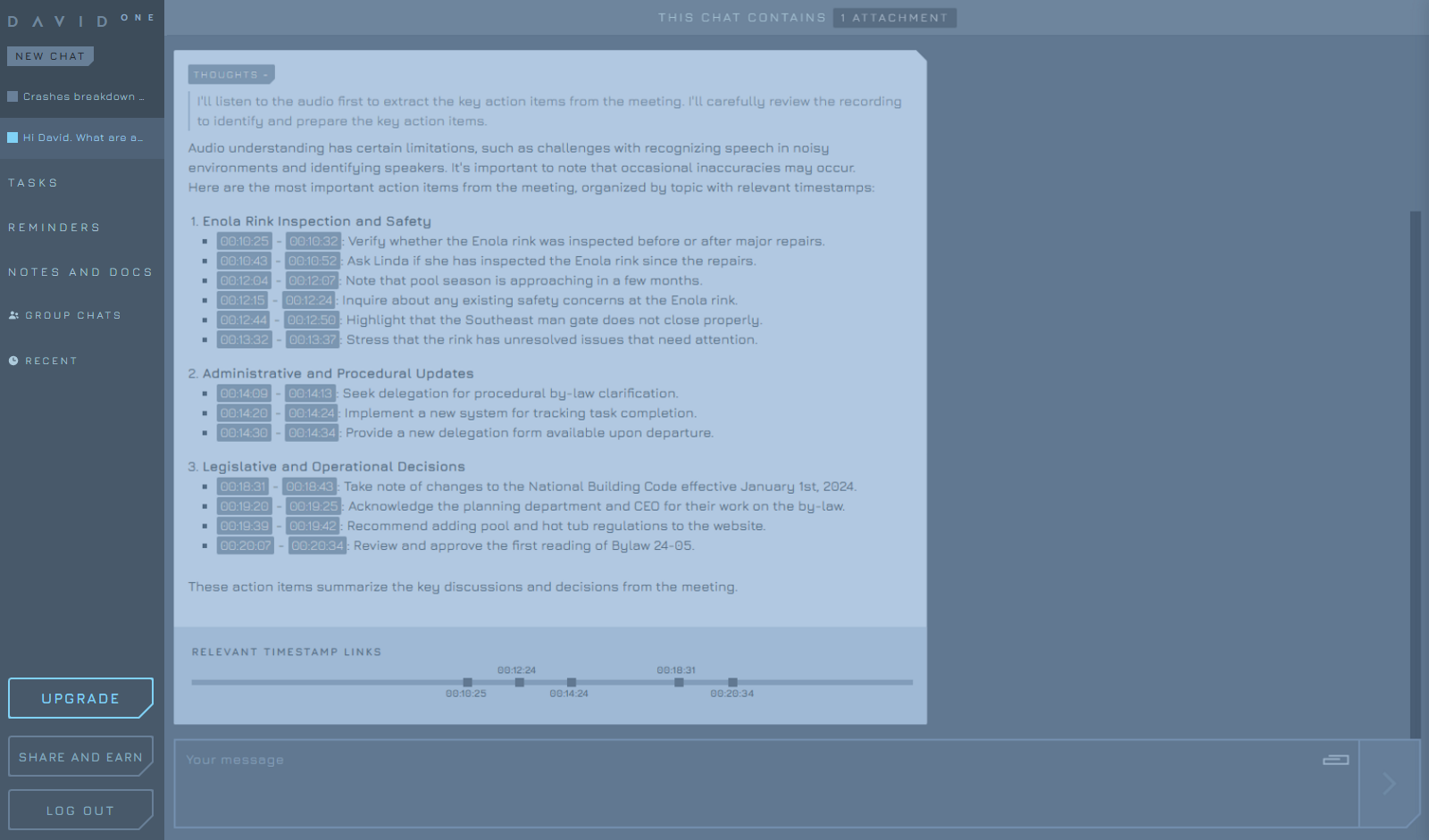

David can prepare meeting notes, summarize, answer questions, and find specific information in audio

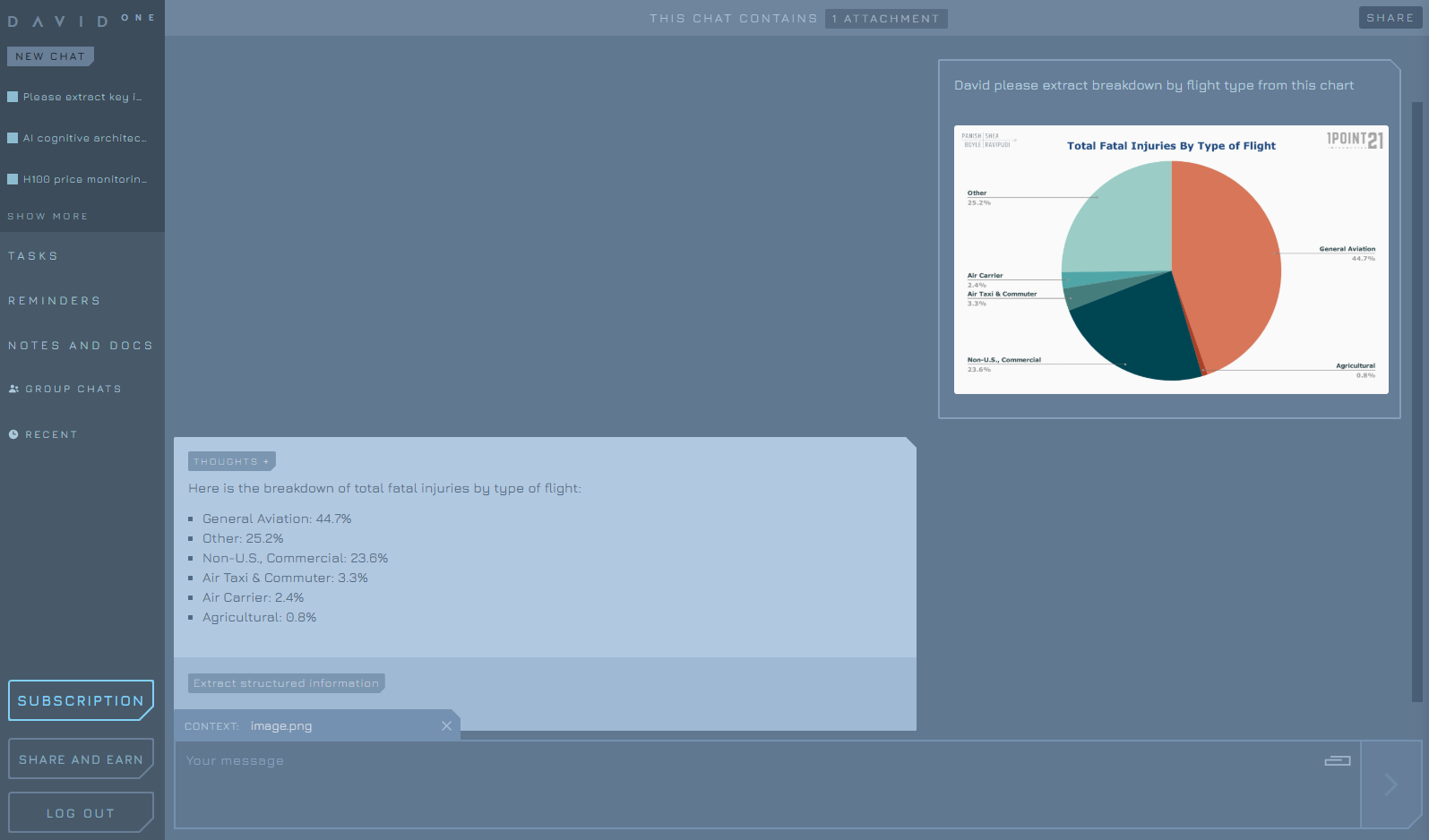

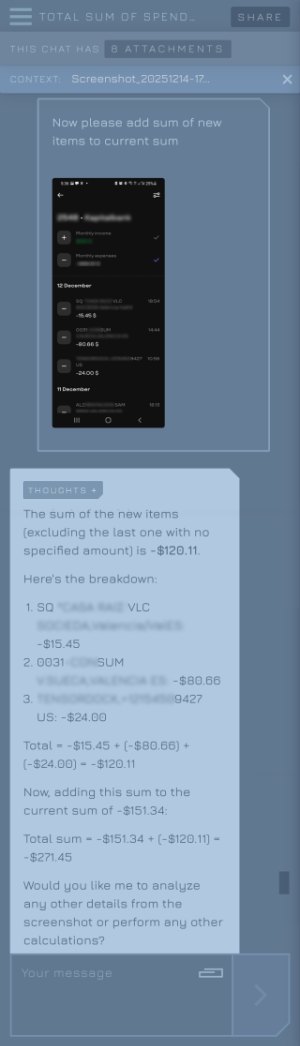

David can help analyze screenshots, charts, photos, and other images, find information in them, or read text from them